Kristen Molyneaux, Vice President, Social Impact, Lever for Change, shares insights from the Peer-to-Peer Review process for the 100&Change competition.

In almost every conversation regarding the second round of 100&Change, we are asked, “What did you learn during the first round of the competition, and what changes have you made based on those learnings?” Of the many findings in our learning and evaluation report, one of the most illuminating was that many judges felt they spent valuable time reviewing non-competitive proposals.

The external judges on our Wise Head Panel volunteer their time and expertise to 100&Change, and they worked diligently and with great empathy to provide valuable feedback on each proposal they reviewed. Recognizing this, we sought ways to elevate only competitive proposals to our judges. It was also critical to stay true to our core values of openness and transparency, while relying on outside experts to help us advance the best ideas to our Board.

In searching for a solution, our competition partner, Common Pool, recommended that we try using an applicant-to-applicant or Peer-to-Peer Review process. This would be an added step between the administrative review, which assesses the basic eligibility of applicants, and the Wise Head Panel. We saw the Peer-to-Peer Review as providing several advantages for both applicants and judges.

For applicants, we hoped that the peer review would provide three overall benefits. First, we anticipated that active participation in the review of other proposals would provide a greater understanding of the scoring rubric and competition criteria. Second, we hoped it might provide applicants an opportunity to identify new potential partners. Anecdotally, some MacArthur grantees who had participated in other competitions that used a peer review told us that they connected with prospective partners after reviewing compelling proposals. They also said they found new, and sometimes more persuasive, ways to frame their own work by reviewing others’. We wanted to see if this experience would hold true within the 100&Change competition.

Finally, we wanted to increase the number of proposals that would receive feedback. In the first round of 100&Change, only 801 applications made it through administrative review out of a total of 1,904 received. This meant that 1,103 applicants did not receive any substantial feedback, a disappointment for us as well as for the organizations that submitted proposals. In the second round of 100&Change, we wanted to ensure that more organizations received feedback. By using a Peer-to-Peer Review, coupled with the organizational readiness tool designed to help organizations better assess their eligibility, we hoped that a larger set of eligible organizations would move beyond the administrative review process and receive feedback on their work, even if their proposal did not advance to the Wise Head Panel.

For judges, we wanted to ensure that they spent more time giving substantive feedback on ideas that were likely to be competitive in the overall competition.

Results from 100&Change Peer-to-Peer Review Process

Of our overall pool of 755 applications, 475 proposals made it through this year’s administrative review process. You can read more about the pool of applicants in Cecilia Conrad’s Perspectives piece, “A Promising Start: 755 Proposals.” Each of the 475 valid proposals moved forward to the Peer-to-Peer Review phase to be reviewed by five organizations working in domains similar to the applicant’s. The scores of all five reviews were then normalized using an algorithm to ensure a level playing field for all applicants.

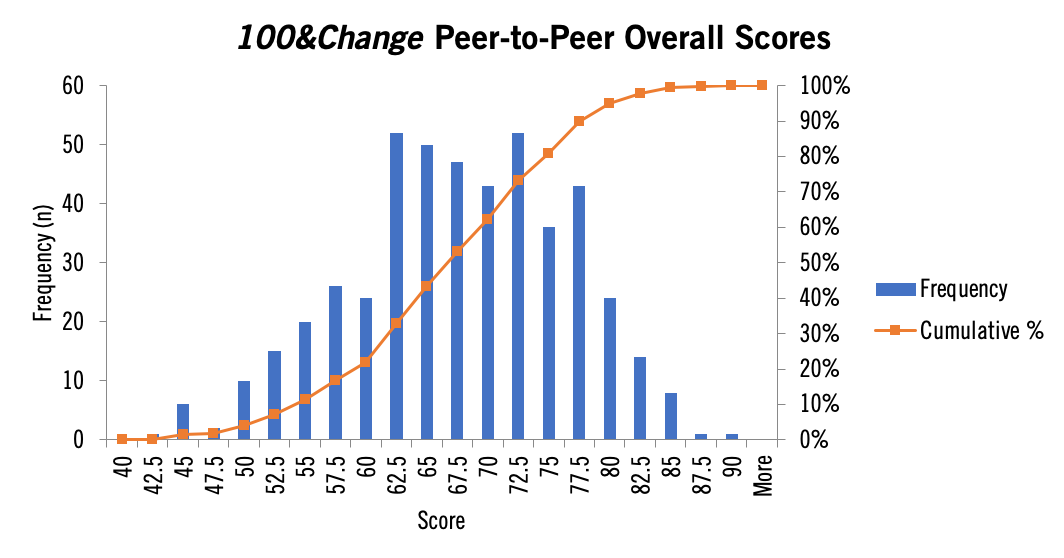

The normalized results for the peer scores are shown in the distribution table below. For this round of 100&Change, we set the cut-off point for organizations to move forward at a score of 50 out of 100. Nineteen proposals scored 50 or below, and an additional three applicants did not move forward because they did not complete the peer reviews assigned to them. Therefore, 22 of the 475 applications, or 4.6 percent, did not move forward to the Wise Head Panel.

By Kevin Drucker, Manager, Quantitative Analysis and Risk Management, MacArthur Foundation
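For readers curious about the mechanics, the following is a rough sketch of how a normalization and cut-off step of this kind could work. The normalization algorithm actually used in the competition is not described here, so the method shown (adjusting each score for how strictly or leniently its reviewer tends to score, then mapping it back onto the pooled scale) is purely an assumption for illustration, and all function names are hypothetical. Only the cut-off of 50 and the counts in the final calculation come from the figures above.

```python
from statistics import mean, pstdev

CUTOFF = 50  # from the text: proposals scoring 50 or below did not advance


def normalize(reviews):
    """reviews: list of (reviewer, proposal, raw_score) tuples.

    ASSUMED method, not the competition's actual algorithm: convert each raw
    score to a z-score within its reviewer's own scores, map it back onto the
    pooled mean and spread, then average the adjusted scores per proposal.
    """
    all_scores = [score for _, _, score in reviews]
    pool_mean, pool_sd = mean(all_scores), pstdev(all_scores)

    # Collect each reviewer's scores to measure how harsh or lenient they are.
    by_reviewer = {}
    for reviewer, _, score in reviews:
        by_reviewer.setdefault(reviewer, []).append(score)
    stats = {r: (mean(v), pstdev(v)) for r, v in by_reviewer.items()}

    adjusted = {}
    for reviewer, proposal, score in reviews:
        r_mean, r_sd = stats[reviewer]
        z = (score - r_mean) / r_sd if r_sd > 0 else 0.0
        adjusted.setdefault(proposal, []).append(pool_mean + pool_sd * z)
    return {proposal: mean(v) for proposal, v in adjusted.items()}


def advances(score, completed_reviews=True):
    """A proposal moves forward only if it scores above the cut-off and the
    applicant completed the peer reviews assigned to them."""
    return completed_reviews and score > CUTOFF


def share_not_advancing(valid, at_or_below_cutoff, incomplete):
    """Percentage of valid proposals that did not move forward."""
    return round(100 * (at_or_below_cutoff + incomplete) / valid, 1)


# Figures from the text: 475 valid proposals, 19 at or below 50, 3 incomplete.
print(share_not_advancing(475, 19, 3))
```

Under this assumed approach, a proposal rated by a strict reviewer and one rated by a generous reviewer are compared on the same footing, which is one plausible reading of "a level playing field."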

Anonymized feedback from the peer review process will be released to applicants. Overall, we have been pleasantly surprised by applicants’ positive reception to the Peer-to-Peer Review. We held multiple training sessions to answer questions about the process and to help applicants understand our rationale.

While we have yet to do a systematic assessment of all of the feedback provided during the Peer-to-Peer Review, the comments we have reviewed have been thoughtful, insightful, and supportive of applicants’ work. In the words of one reviewer who reached out to us to inquire whether he could connect two of the applicants whose proposals he reviewed, “What an authentic way to gain a rarified glimpse into the rest of the world.”

We hope that many other applicants found this exercise equally inspiring and insightful.