Kristen Molyneaux, Vice President, Social Impact, Lever for Change, shares results from a survey of judges in the 100&Change Wise Head Panel.

As part of our ongoing commitment to learning, we surveyed our Wise Head Panel of external judges about their experience evaluating 100&Change proposals. We appreciate that judges take time out of their busy schedules to evaluate, rate, and provide valuable feedback to applicants. Their input allows us to run an open and transparent competition.

In a recent blog post, Cecilia Conrad described the diversity of this year's Wise Head Panel. Here we provide some of their insights on the overall judging process.

Building on lessons from the inaugural 100&Change competition, including judges’ feedback, we added two steps before applications arrive in the judges’ inboxes: an organizational readiness tool and a Peer-to-Peer Review process. Both were designed in response to concerns from first-round 100&Change judges, who felt they spent too much time reviewing proposals that were unlikely to be competitive.

Additionally, we created a “smart application” with links to resources and tools on topics such as creating a theory of change, budgeting for inclusion, and using frameworks to scale projects. These resources were intended to help organizations strengthen their applications. We hoped that these additions would reduce the burden on our panel of Wise Heads while still delivering a competitive group of proposals.

In total, 153 of our 293 Wise Heads responded to our post-evaluation survey, a response rate of about 52 percent. Overall, 62 percent of respondents felt the proposals were of good quality, and 27.5 percent felt they were of very high quality. Only 10.5 percent felt that the quality of proposals could have been stronger. Judges noted two areas where applicants need to provide greater clarity: specifics on the project’s activities and accurate budgeting. We can continue to hone the application in these areas for future rounds of the competition.

“The applications were often vague about what specifically [the organization] proposed to do. [Consider adding] a question…‘what specifically do you propose to do?’ It was [also] hard to know what made this approach unique compared to others in the field. [Consider adding] a question about this.”—Judge's comment

Seventy percent felt the second-round proposals were stronger

Of the 153 judges who took the survey, 79 were returning judges. We asked them whether they felt the quality of the proposals had increased between the first and second rounds of the competition. Seventy percent felt the second-round proposals were stronger, 24 percent felt they were about the same, and 3 percent felt that the quality had decreased. This suggests that, while we still have room for improvement, the steps we added to our process increased the overall strength of the applicant pool.

“The improvements you instituted this time streamlined and clarified my work. The sequence of the proposals, the focus imposed by limits, the crisper questions helped a lot on the proposal side.”—Judge's comment

Several judges noted that non-competitive applications still made it to the Wise Head review process. In future rounds, we will rethink the cutoff score for applications advancing to this stage of the competition and continue to build processes that focus on strengthening applications.

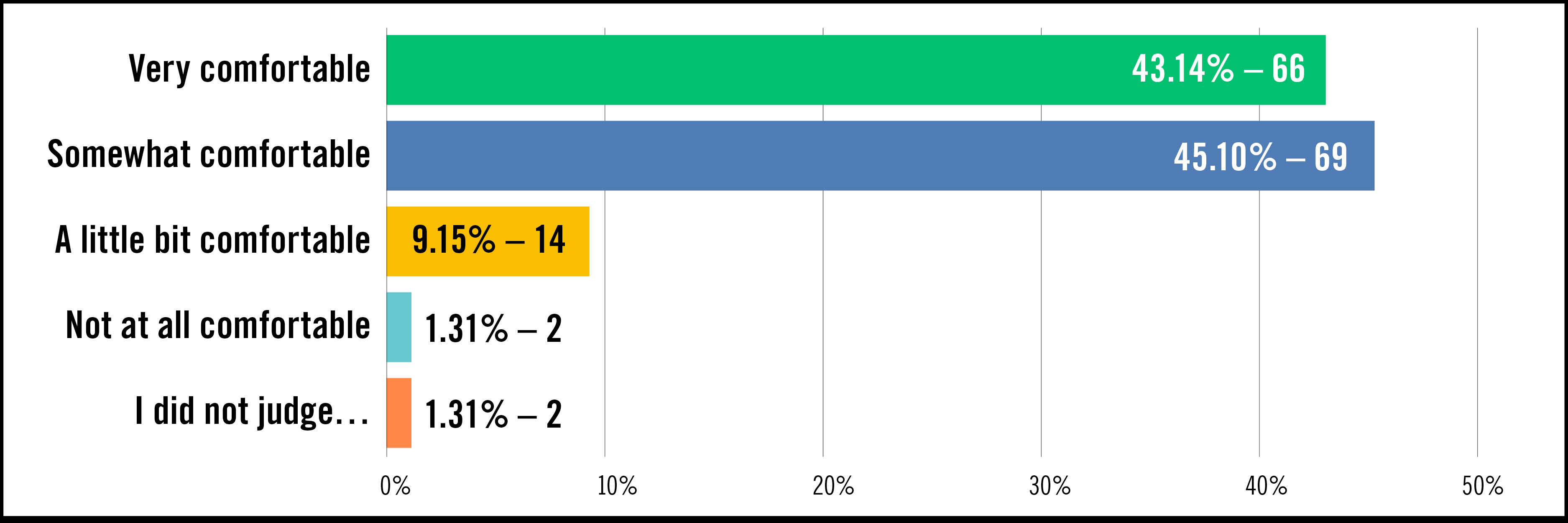

In our process, judges are randomly assigned proposals across multiple domains. Because of this, we are frequently asked how comfortable judges are reading proposals outside their area of expertise. Now we have evidence to back up what we had heard anecdotally: in our survey, 88 percent of judges stated they were very or somewhat comfortable evaluating applications outside their domain. While some would still prefer closer alignment to their area of expertise, others embraced the lack of familiarity.

“It was a good first experience. I was surprisingly quite comfortable judging proposals outside my thematic area. I guess a good proposal stands out.”—Judge's comment

Judges' Comfort Evaluating Outside Their Expertise

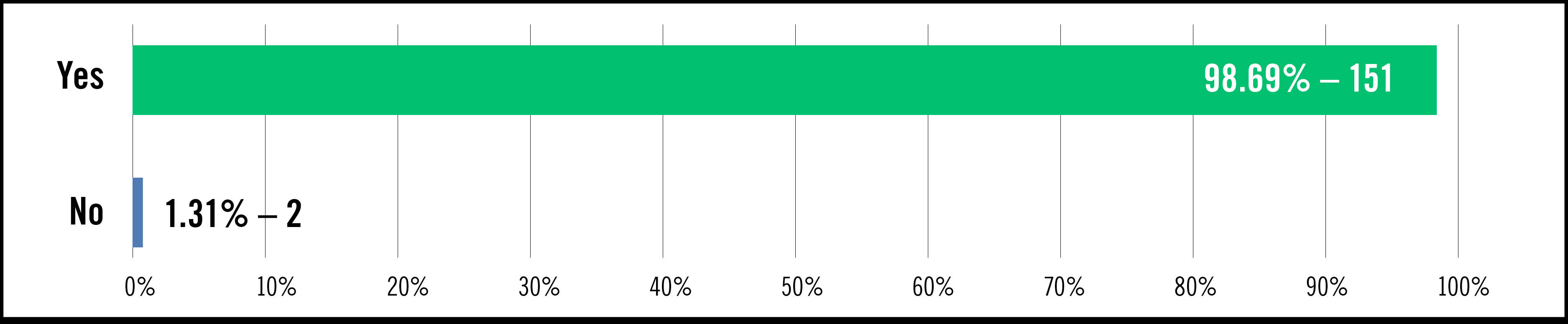

One of the most important questions we asked judges was whether they would be willing to judge future rounds of applications. This gives us a sense of their overall satisfaction with the process and whether they enjoyed participating in 100&Change. We are happy to report that an overwhelming majority of respondents, 98.6 percent, stated they would be interested in participating as a judge in the future.

They also offered insights on how to make judges feel more comfortable with the process next time, including providing more information on the breadth of due diligence activities outside of their review, creating a full timeline of the judging process, and sharing examples of strong reviewer feedback. We intend to take all of these suggestions into consideration as we continue to strengthen our process.

Interest in Judging Future Competitions

We are honored that so many people from around the world are willing to participate in 100&Change. Their efforts, insights, and perspectives help make 100&Change a stronger competition for all of those involved. We are thrilled to see that our improved and enhanced process created a better experience, and we thank all of our judges for lending their expertise and time to this endeavor.