Long before we solicited applications for 100&Change, we had to figure out who would judge the proposals we expected to receive and what criteria should be used.

We had a lot of spirited discussion as we considered three different models.

The first method we considered was a crowdsourcing model. There is wisdom in letting people propose the problems they would solve and then having a crowd assess, through open voting, whether each proposal is meaningful or compelling. But we realized this approach also had drawbacks. We did not want 100&Change to turn into a popularity contest that put some proposals at a competitive disadvantage. We worried that open voting might favor emotional appeal over effectiveness, or razzle-dazzle technology over simple solutions.

The second approach, at the other extreme, was the specialists’ panel model. The idea was that we would define a field of work and then identify experts in that discipline whom we could ask to evaluate applications. We expected that specialists would be less swayed by sentiment and would pay closer attention to proof that a solution worked. But once we decided that 100&Change would not be limited to any single field or problem area, it became clear that this approach was incompatible with an open call for applications. There was also a sense that experts in a given field tend to struggle with new ideas that come from outside their discipline.

We realized that crowds provide a way to take more risks, innovate, and think outside the box. We also knew that the wisdom of experts is important. So, what if we created a crowd of wise experts?

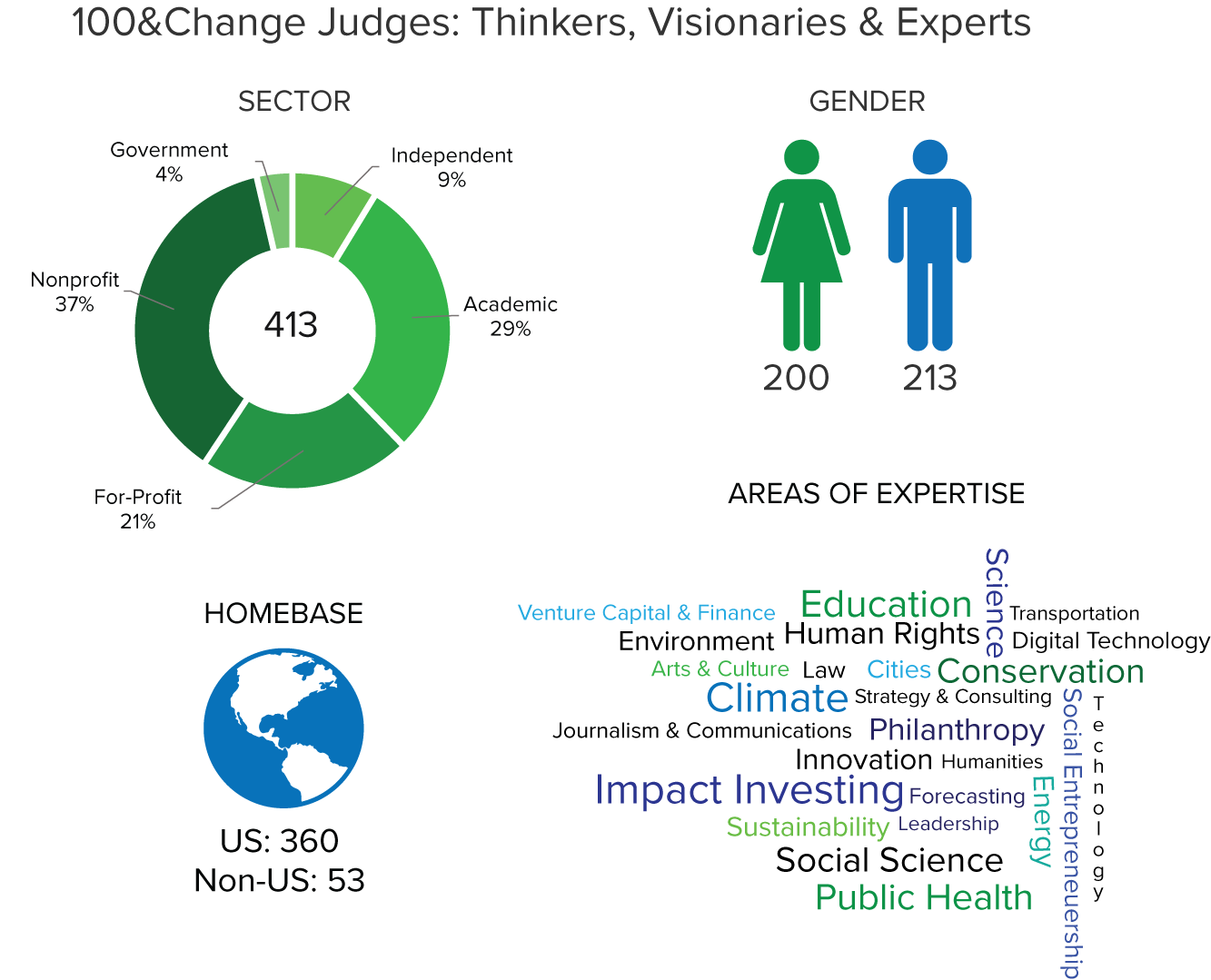

“A panel of wise heads,” someone on the Foundation’s Board of Directors called it. That is what we aimed for when we recruited judges. We assembled a cross section of experts from different sectors: nonprofit and for-profit, academia and government were all represented. We sought diversity of every kind. We deliberately recruited people who either lived outside the United States or had significant life experience outside the U.S. More than 50 were internationally based.

We ended up with an evaluation panel of more than 400 thinkers, visionaries, and experts in fields spanning education, public health, impact investing, technology, the sciences, the arts, and human rights. Our panel was nearly evenly split between women and men, and 77 judges were people of color.

The notion of broad judging panels is not completely new. It has been used before in sector-specific competitive evaluations. And the former Global Business Network pioneered a model that brought together a network of experts from a range of fields to offer their perspectives on emerging trends, opportunities, and challenges. We believe, however, that this is the first time a competition has been evaluated by a wise-heads panel of judges on this scale.

Rather than having our judges review submissions based on their field of expertise, we randomly assigned proposals and asked them to determine whether projects were meaningful, verifiable, feasible, and durable based on their broad knowledge. Each application was judged by a panel of five experts.

One disadvantage of our approach is that we asked extremely busy people to volunteer their time. We also asked them to commit well in advance of when they would actually need to review applications. So, it is understandable that we lost some reviewers along the way. One lesson we learned is that, even with several hundred judges, we still needed to build in excess capacity.

There are still many questions we will ask ourselves about our evaluation method. For example, are judges who lack technical expertise in a field more or less likely to rate a proposal in that field highly? We will analyze the results to identify any biases embedded in this approach.

At every step of the 100&Change competition, we are soliciting feedback, including from our panel of judges, some of whom will share their thoughts on the blog soon. We believe being open and responsive will lead to a better outcome: more interest in solutions that can work, and even stronger proposals in the future that might just pay off for society.