Movement Alliance Project exposes bias in algorithmic decision-making tools and advocates for limits on, oversight of, and accountability for their use.

Scooping up countless bits of data, predictive computer models make projections behind the scenes about what products people will buy, how they will vote, who they might want to date, which TV shows they will watch, and more.

The tools behind these projections are known as algorithmic decision-making systems, or ADS. They are also used to guide judgments that can change lives forever, such as whether a person is likely to commit a crime or whether their children should be taken from them.

“As tools of algorithmic decision making appear in every field of our lives, it’s going to be critical that communities understand what these tools are,” said Bryan Mercer, Executive Director of Movement Alliance Project (MAP). The Philadelphia nonprofit unites community organizations around initiatives where technology, race, and inequality intersect.

“Our communities should not allow a computer to be the cloak that hides responsibility institutions of power have when making decisions about human life,” he said.

Risk assessment tools, a type of ADS, are intended to help reform criminal justice and other areas by removing human bias from decisions. But activists, attorneys, and data scientists warn that the software can perpetuate inequality.

“These tools take decades of bias and turn it into math,” said Hannah Sassaman, MAP Policy Director. She challenges the notion that local governments need artificial intelligence to decide questions such as who can be released from jail safely before trial.

Experts’ primary concern is that, in many cases, the data fed into the software that produces risk scores is tainted. How? It was gathered over years of unfair housing programs, discriminatory policing, and similar inequitable practices that hurt communities of color and portray them negatively.

As a result, the software can inaccurately conclude that people of color pose high risks.

Cataloguing Widespread Risk Assessment

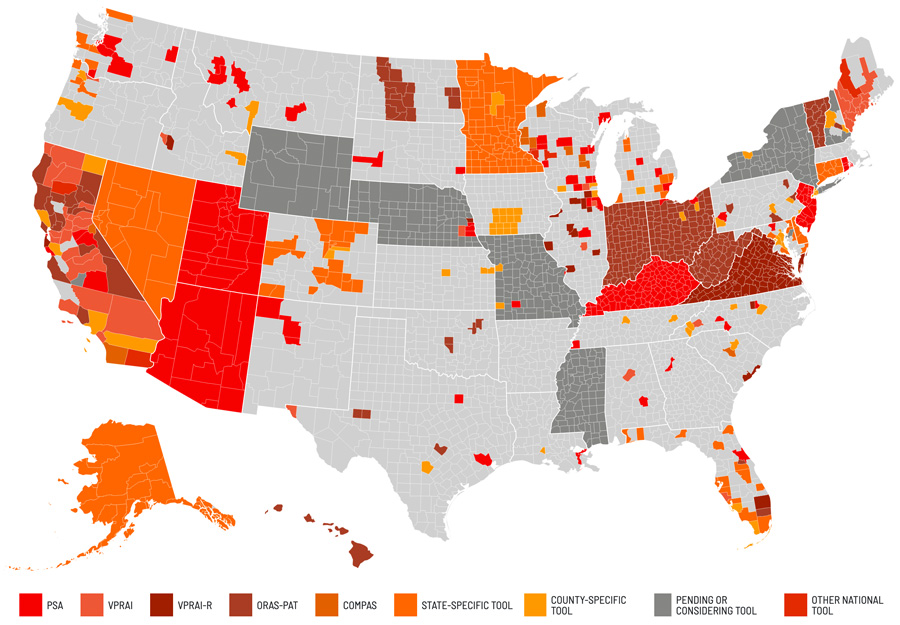

To address concerns over algorithm bias, MAP partnered with MediaJustice, a nonprofit working for more equitable media and technology. Their three-year investigation, Mapping Pretrial Injustice, found that more than 1,000 counties in 46 states and Washington, D.C., used the tools.

In addition, their work showed that most of those jurisdictions do not evaluate whether the tools lower jail populations or address racial disparities. The two organizations also released a database cataloguing for the first time how widespread risk assessment tools are in pretrial decision making, and linked communities with resources on confronting risk assessment.

Movement Alliance Project partnered with MediaJustice to develop a map showing where pretrial risk assessment tools, used to determine if someone awaiting trial should be incarcerated, are in place.

Sassaman also leads MAP’s preliminary work examining ADS’s impact in the child welfare system.

“We are in the first stages of sketching out how data scientists, researchers, and community organizers can work together to build power for parents to interrupt the surveillance and family separation endemic to this system,” Sassaman said.

Technology and people’s empowerment have been central concerns for MAP since its founding in 2005. In 2016, it pressed Comcast to expand internet access for low-income Philadelphia residents. MAP has also challenged police surveillance and the use of facial recognition technology, another algorithmic tool with built-in bias against people of color.

In addition, MAP has supported low-wage laborers in achieving better working conditions, confronted gentrification and displacement, and assisted undocumented immigrants pursuing citizenship.

Movement Alliance Project participated in a Day of Action on May Day 2017 near Philadelphia City Hall.

Data Scientists’ ‘Moral Compass’

The use of algorithms in predictive computer programs dates back decades. As computing power increases and mountains of data become more accessible, the tools have proliferated in health care, lending and banking, social services, and hiring.

Proponents say the algorithms are more transparent and less biased than the human mind. Skeptics include data scientists and MAP’s Mercer, who contend that risk assessment tools have allowed bias “to become hidden even deeper in criminal legal proceedings,” exacerbating adverse outcomes for people of color.

Kristian Lum, an assistant research professor at the University of Pennsylvania who is known for her work on fairness in algorithms and machine learning, said the issue “has become a very hot topic in the field of data science and machine learning.”

MAP, she said, is a “moral compass” for data scientists.

Aside from concerns about how the raw data is gathered, Lum said the technology may discourage policy makers from asking bigger reform questions: whether jurisdictions need to detain so many people before trial, for example, or whether investing more in under-resourced neighborhoods would lower crime and advance justice.

“Instead of a radical rethinking of how things are done,” she said, “we’re just doing the same old things but with a computer.”

Dropping Risk Data Tools

In an August 2020 Wired magazine column, Sassaman wrote that the reduction in jail populations during the COVID-19 pandemic—with no corresponding increase in crime—proves that reforms such as ending mass incarceration and cash bail are possible without the technology.

MAP and its allies believe that data-driven pretrial incarceration should be a last resort.

The community organizations Sassaman assembled have helped persuade policy makers and governments, including those in Philadelphia, to drop the tools or delay adopting them. Some cities have also outlawed predictive policing and issued moratoriums on facial recognition technology.

Those moves come amid a shift in the national view of risk assessment tools, one that MAP helped spark. In 2018, the organization began working with the Leadership Conference on Civil and Human Rights. The collaboration yielded a policy statement opposing the use of the tools in efforts to end cash bail. More than 100 groups signed it.

Soon after, several nonprofits supporting the development of the technology reversed course, said Sakira Cook, director of the Justice Reform Program at the Leadership Conference.

The statement also laid out principles for governing the technology, which activists are using to press for safeguards, transparency, and accountability in places where forcing an end to the practice is unrealistic.

“In some places, we can’t beat it back,” Cook said. “So, we need to give people a road map for mitigation.”

In 2019, MacArthur awarded Movement Alliance Project, then known as Media Mobilizing Project, $770,000 through two Technology in the Public Interest grants.